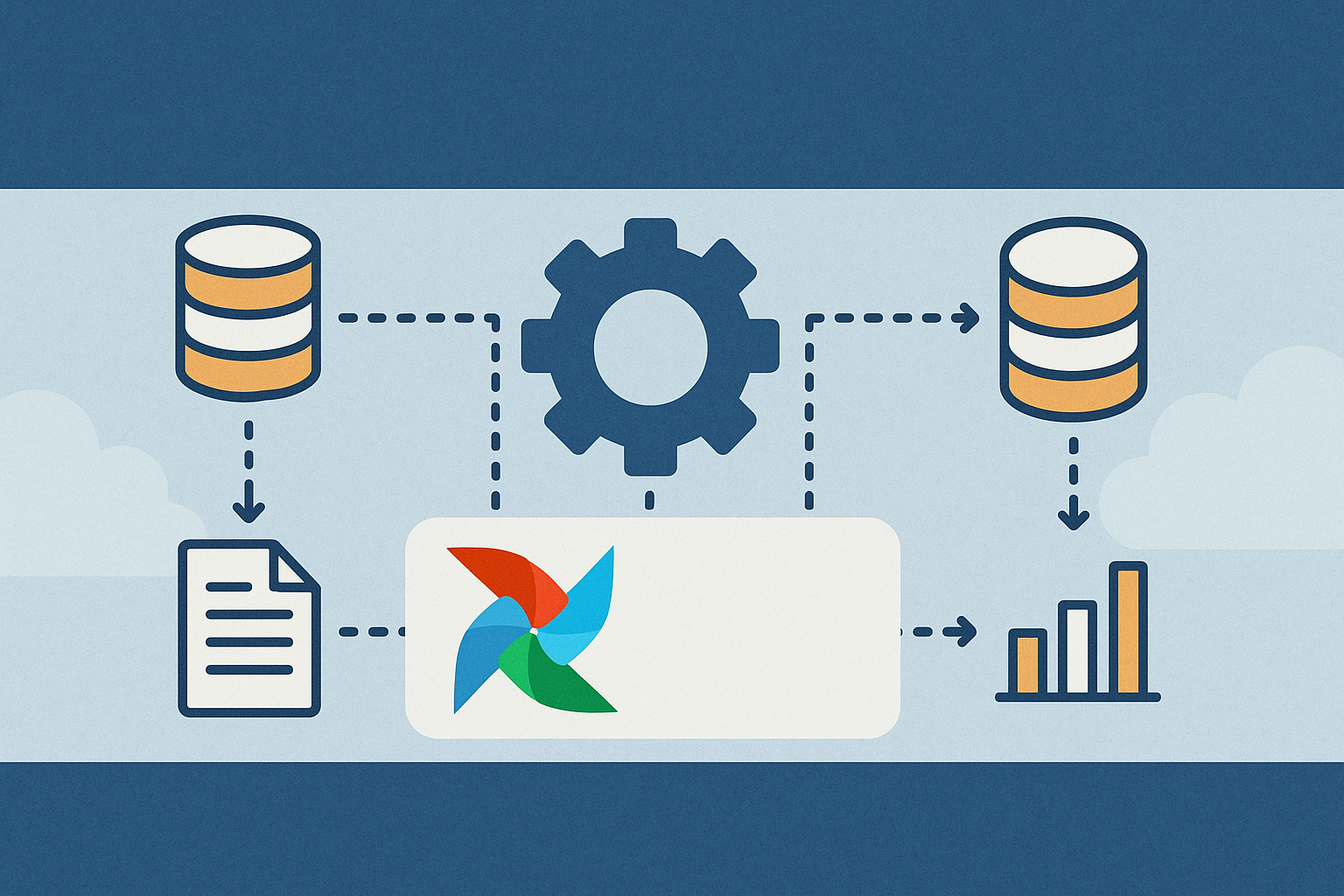

In today’s rapidly evolving digital landscape, data is the lifeblood of successful businesses. From startups to industry giants, organizations increasingly depend on robust data management practices to fuel informed decision-making and strategic growth. Central to this practice is the field of Data Engineering, particularly the ETL (Extract, Transform, Load) processes that drive the movement and transformation of data. Among the numerous tools designed to manage these complex workflows, Apache Airflow has emerged as one of the most powerful and popular solutions available today.

In this blog, we’ll dive deep into the core principles and practical steps of building effective ETL data pipelines using Apache Airflow, enriched with examples, external resources, and best practices.

Understanding ETL: The Foundation of Data Engineering

Before diving into Apache Airflow, let’s revisit the fundamentals of ETL:

- Extract: Gathering data from multiple sources—databases, APIs, file systems, or streams.

- Transform: Cleaning, reshaping, validating, and enriching data to prepare it for analysis.

- Load: Storing processed data into databases, data lakes, or data warehouses for business use.

Proper management of these stages ensures data quality, integrity, and timely availability, crucial for real-time analytics and insights generation. For further reading, check out this detailed resource on ETL fundamentals from AWS.
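To make the three stages concrete, here is a minimal, framework-free sketch in Python (3.9+). The sample CSV data, the `orders` table, and the function names are hypothetical. An in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw input: in practice this might come from an API, a file, or a database
RAW_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,
3,carol,5.50
"""


def extract(raw: str) -> list[dict]:
    """Extract: parse raw CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop rows with a missing amount, cast types, tidy names."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # validation: skip incomplete records
        cleaned.append((int(row["order_id"]), row["customer"].title(), float(row["amount"])))
    return cleaned


def load(records: list[tuple], conn: sqlite3.Connection) -> int:
    """Load: write the cleaned records into a table and return the table's row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
    count = load(transform(extract(RAW_CSV)), conn)
    print(f"Loaded {count} rows")  # → Loaded 2 rows
```

Each stage is a plain function with a clear input and output. That separation makes the stages easy to test on their own and, later, to wrap as individual Airflow tasks.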

Why Choose Apache Airflow?

Apache Airflow is an open-source platform designed to programmatically author, schedule, and monitor workflows. It was originally created at Airbnb in 2014 and is now a top-level Apache Software Foundation project. Airflow has become a standard choice for orchestrating ETL processes thanks to its flexibility and scalability.

Key reasons to choose Airflow include:

- Python-Based: Workflows (called DAGs—Directed Acyclic Graphs) are defined entirely in Python, enhancing flexibility and ease of use.

- Scalable and Extensible: Capable of handling workflows ranging from simple cron-style jobs to highly complex data processing pipelines, Airflow integrates with cloud services like AWS, Azure, and Google Cloud through its provider packages.

- Rich Web UI: Offers powerful visualization tools for tracking tasks, pipeline health, and performance.

- Community-Driven: Backed by a strong community, comprehensive documentation, and extensive resources for troubleshooting.

Explore more on why Airflow is the go-to choice in the modern data engineering stack from Medium’s deep dive into Airflow.
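The "Directed Acyclic Graph" idea is easy to see with Python's standard-library `graphlib` module (Python 3.9+). This is not how Airflow's scheduler is implemented. It is only an illustration, with hypothetical task names, of why dependencies must form a DAG: an acyclic graph always has a valid run order, while a graph with a cycle has none.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a task; its value is the set of tasks it depends on (its upstream tasks)
etl_dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}


def run_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return one valid execution order, or raise CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dependencies).static_order())


print(run_order(etl_dependencies))  # ['extract', 'transform', 'load']

# Making "extract" depend on "load" closes a loop, so no valid order exists
cyclic = {**etl_dependencies, "extract": {"load"}}
try:
    run_order(cyclic)
except CycleError as err:
    print("Not a DAG:", err.args[0])
```

A linear chain like this has exactly one valid order. Graphs with parallel branches can have several, and a scheduler is free to run independent tasks concurrently.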

Getting Started with Apache Airflow: A Practical Guide

Step 1: Installation and Initialization

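Start by installing Airflow via pip:

```bash
pip install apache-airflow
```

For a reproducible setup, the official installation guide recommends pinning an Airflow version and installing against the matching constraints file that the project publishes.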

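After installation, initialize the Airflow metadata database:

```bash
airflow db init
```

On Airflow 2.7 and later, `airflow db init` is deprecated; use `airflow db migrate` instead.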

Start the webserver:

```bash
airflow webserver --port 8080
```

Then, in a new terminal window, start the scheduler:

```bash
airflow scheduler
```

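Your Airflow UI is now accessible at http://localhost:8080. To log in, first create an admin account with `airflow users create` (run `airflow users create --help` for the required flags). For quick local experiments, `airflow standalone` initializes the database, creates an admin user, and starts all components with a single command.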
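You can also confirm from a script that the services came up by querying the webserver's health endpoint. This is a minimal sketch that assumes Airflow 2.x, whose webserver serves `/health` as JSON with a `status` field for the metadata database and the scheduler. The URL constant and helper names are illustrative, and newer major versions may expose health information elsewhere.

```python
import json
import urllib.request

HEALTH_URL = "http://localhost:8080/health"  # assumes the default port used above


def components_healthy(payload: dict, components=("metadatabase", "scheduler")) -> bool:
    """Return True if every listed component reports status 'healthy'."""
    return all(payload.get(name, {}).get("status") == "healthy" for name in components)


def check_airflow(url: str = HEALTH_URL, timeout: float = 5.0) -> bool:
    """Fetch the health JSON from a running Airflow 2.x webserver and evaluate it."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        payload = json.load(response)
    return components_healthy(payload)
```

Call `check_airflow()` once both the webserver and the scheduler are running. A `False` result usually means the scheduler has not sent a recent heartbeat.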

Step 2: Creating Your First ETL Pipeline in Airflow

In Airflow, pipelines are structured as Directed Acyclic Graphs (DAGs). Let’s create a simple ETL pipeline.

Create a file named etl_pipeline.py inside Airflow's DAGs folder (`$AIRFLOW_HOME/dags`, which defaults to `~/airflow/dags`):

```python
from datetime import datetime, timedelta

from airflow import DAG
# Airflow 2.x import path; airflow.operators.python_operator is deprecated
from airflow.operators.python import PythonOperator

# Default arguments applied to every task in the DAG
default_args = {
    "owner": "airflow",
    "depends_on_past": False,
    "start_date": datetime(2025, 4, 11),
    "retries": 1,
    "retry_delay": timedelta(minutes=5),
}

# Define the DAG
dag = DAG(
    "etl_pipeline",
    default_args=default_args,
    description="A simple ETL pipeline example",
    schedule=timedelta(days=1),  # replaces the deprecated schedule_interval (Airflow 2.4+)
    catchup=False,  # don't backfill every missed daily run since start_date
)


# ETL functions
def extract():
    print("Extracting data...")
    # Add extraction logic here


def transform():
    print("Transforming data...")
    # Add transformation logic here


def load():
    print("Loading data...")
    # Add loading logic here


# Define tasks using PythonOperator
extract_task = PythonOperator(
    task_id="extract",
    python_callable=extract,
    dag=dag,
)

transform_task = PythonOperator(
    task_id="transform",
    python_callable=transform,
    dag=dag,
)

load_task = PythonOperator(
    task_id="load",
    python_callable=load,
    dag=dag,
)

# Set up dependencies: extract runs first, then transform, then load
extract_task >> transform_task >> load_task
```

Explanation:

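- Tasks (extract, transform, load): Each PythonOperator task wraps one Python function representing an ETL stage; the task_id is the name shown in the Airflow UI.

- PythonOperator: Executes Python functions within Airflow tasks.

- Task Dependencies: The >> operator makes each task downstream of the one before it, so tasks execute sequentially (extract → transform → load). By default, a task starts only after its upstream task succeeds.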
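The placeholder functions above only print messages. A common way to pass data between stages is XCom. In Airflow 2.x, a PythonOperator pushes its callable's return value to XCom by default. A callable that declares a parameter named `ti` receives the running task instance, whose `xcom_pull` method fetches upstream results. The sketch below rewrites the three functions this way. The sample records, the `sales` table, and the `db_path` default are hypothetical. The code deliberately avoids importing Airflow, so the logic can be unit-tested with a stand-in for `ti`.

```python
import sqlite3


def extract():
    # PythonOperator pushes this return value to XCom (under the key "return_value")
    return [{"region": "north", "sales": "120"}, {"region": "south", "sales": "80"}]


def transform(ti):
    # Airflow passes the running task instance because the parameter is named `ti`
    rows = ti.xcom_pull(task_ids="extract")
    return [(row["region"].upper(), int(row["sales"])) for row in rows]


def load(ti, db_path="etl_demo.db"):
    # Hypothetical target: a local SQLite file standing in for a real warehouse
    records = ti.xcom_pull(task_ids="transform")
    conn = sqlite3.connect(db_path)
    try:
        with conn:  # commits the inserts if no exception is raised
            conn.execute("CREATE TABLE IF NOT EXISTS sales (region TEXT, total INTEGER)")
            conn.executemany("INSERT INTO sales VALUES (?, ?)", records)
        # Return the table's total row count (this value is also pushed to XCom)
        return conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
    finally:
        conn.close()
```

In the DAG file, only the function bodies change; the PythonOperator tasks and the `extract_task >> transform_task >> load_task` line stay as they are. Keep XCom payloads small, because by default they are stored in Airflow's metadata database. For large datasets, write to external storage and pass a file path or table name instead.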

Best Practices for Apache Airflow ETL Pipelines

When implementing ETL pipelines with Airflow, consider these best practices to maximize efficiency and maintainability:

- Keep DAGs Simple and Modular: Break complex tasks into smaller, manageable units.

- Use Airflow Variables & Connections: Store credentials in Airflow Connections and non-secret configuration in Variables (or in a secrets backend) rather than hard-coding them in DAG files.

- Robust Error Handling and Retries: Configure retries, retry delays, and failure callbacks or email alerts. Transient failures are then retried automatically, and persistent ones get noticed.

- Regular Monitoring and Logging: Use the Airflow UI and per-task logs to track pipeline health and troubleshoot issues proactively.

- Documentation: Clearly document each pipeline and its purpose within your code for maintainability.

For detailed best practices, refer to this comprehensive guide from Astronomer.
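As a sketch of the retry and alerting advice, the `default_args` below use standard Airflow 2.x BaseOperator arguments: `retries`, `retry_delay`, `retry_exponential_backoff`, `max_retry_delay`, `execution_timeout`, and `on_failure_callback`. The callback name and the choice to simply log the failure are assumptions. In practice you would forward the message to email, Slack, or another alerting tool, keeping that tool's credentials in an Airflow Connection.

```python
import logging
from datetime import timedelta

log = logging.getLogger(__name__)


def notify_failure(context):
    """Hypothetical failure callback: Airflow calls it with the task's context dict."""
    ti = context.get("task_instance")
    task_id = ti.task_id if ti is not None else "unknown task"
    message = f"Task {task_id} failed: {context.get('exception')}"
    log.error(message)  # swap in email, Slack, or a pager integration here
    return message


default_args = {
    "owner": "airflow",
    "retries": 3,                              # retry transient failures up to 3 times
    "retry_delay": timedelta(minutes=5),       # wait before the first retry
    "retry_exponential_backoff": True,         # grow the delay between successive retries
    "max_retry_delay": timedelta(minutes=30),  # cap on the backoff delay
    "execution_timeout": timedelta(hours=1),   # fail tasks that hang
    "on_failure_callback": notify_failure,     # runs once retries are exhausted
}
```

Passing `default_args=default_args` to the DAG applies these settings to every task, and individual operators can still override any of them.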

Real-World Use Cases of Apache Airflow

Apache Airflow is trusted across industries:

- E-commerce: Scheduling automated inventory updates and customer data integration.

- Finance: Managing secure data transfers, regulatory reporting, and transaction analytics.

- Healthcare: Streamlining medical record data flows, analytics, and reporting.

- Media & Entertainment: Scheduling the batch jobs behind content recommendation systems, streaming-data analytics, and customer insights.

Enhance Your Data Engineering Skills with AiMystry

For those passionate about leveraging cutting-edge data engineering practices, we encourage you to explore more resources at AiMystry. AiMystry is your one-stop platform for insightful articles, tutorials, and industry trends in AI, data science, and engineering. Explore internal blogs on ETL pipelines, data integration, cloud engineering, and much more, tailored to help you master the craft of data engineering.

Conclusion

Apache Airflow significantly simplifies building, managing, and scaling ETL pipelines. Its Python-based, extensible nature makes it ideal for handling complex data workflows effectively. By leveraging Airflow’s robust features and adopting best practices, data engineers can ensure data integrity, streamline analytics processes, and drive business success.

Ready to embark on your data engineering journey with Apache Airflow? Dive deeper into resources, start experimenting, and revolutionize your data workflows today!